Unit Testing in Visual Studio 2022 with Copilot Agent Mode and TDD

Visual Studio recently added agent mode to its Copilot offering. The feature has existed for some time in VS Code, so I was excited to see it arrive in Visual Studio as well. As more code is written with agents, I wanted to try Test Driven Development (TDD) in tandem with one. I have noticed that generated code often takes many revisions before it matches what I am looking for, and that can get expensive in terms of time and tokens. My goal is a workflow that maximizes the value agentic coding can bring. By using TDD and the principle of Red Green Refactor (RGR), I’m hoping to find an efficient way to develop quality code faster. Here I will walk through a simple example with unit tests. Most developers have had to write a FizzBuzz implementation at some point, so that is the example I will use.

Getting Started

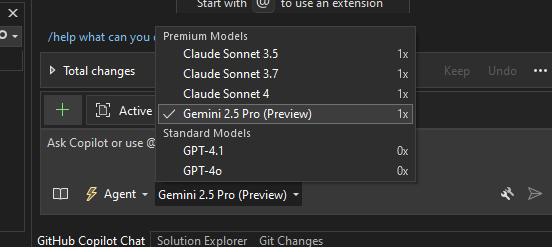

With agent mode and Copilot Pro, there are a few new options available. Lately I have been using the premium models, specifically Gemini 2.5 Pro. For this example I will use that model with the basic built-in tools.

Various libraries exist for unit testing in .NET. I will be using the following:

- xUnit for unit testing

- Shouldly for assertions

- NSubstitute for mocking

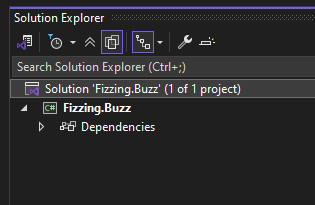

Project Set Up

The initial solution contains a single .NET Core C# class library project, which is meant to mimic an existing codebase. I want to go through the process of adding a new class with a new feature, so first I will ask the agent to create the class. Being as specific as possible in the prompts is important; otherwise the agent sometimes gets its own ideas.

Here is the prompt to set up the implementation class:

Create a new class in the Fizzing.Buzz project. Call it BuzzChecker. Add a new method in the class called verify that takes an int and outputs a string. Do not implement this class.

Prompt

    // Generated Class
    public class BuzzChecker
    {
        public string Verify(int number)
        {
            throw new NotImplementedException();
        }
    }

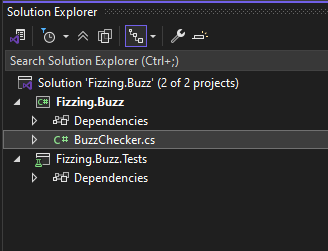

Now that we have a class to start with, I set up a test project with the following prompt. I had to run it twice because the first attempt got caught up trying to create a new solution for both projects. Specifying that the project should be added to the existing solution helped.

Create a new xUnit testing project called Fizzing.Buzz.Tests and add it to the Fizzing.Buzz solution. Reference the Fizzing.Buzz Project. Add nuget packages for Shouldly and NSubstitute. Do not add any tests and remove any default tests. Ensure the entire solution compiles.

Prompt

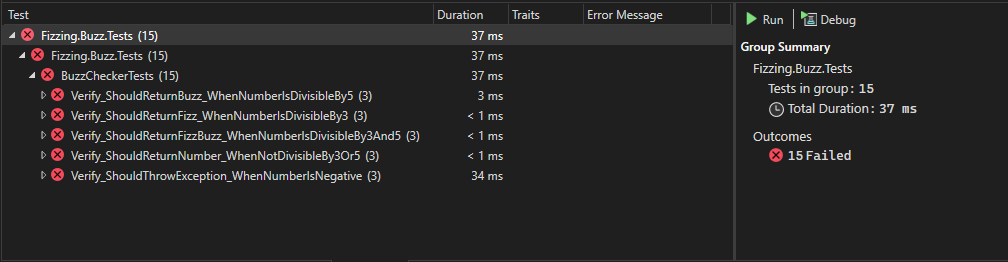

Creating the Unit Tests

Now that the new class is in place, I want to make sure the generated code is verified against my requirements. I will tell the agent to create only the tests for the class and to verify that they currently fail. This is the Red step in RGR.

I want to do TDD to develop this feature, so write the unit tests first, verify that they are compiling and not passing. Do not implement the feature. Create a set of unit tests for the method Verify of the class BuzzChecker. The tests should use xUnit and the Arrange Act Assert pattern. Use Shouldly for assertions. An exception should be thrown if negative numbers are passed in. If the number is divisible by 3, it should return “Fizz”. If the number is divisible by 5, it should output “Buzz”. If the number is divisible by both 3 and 5, it should return “FizzBuzz”. For all other integers, it should return the value as a string.

Prompt

The generated test class:

    public class BuzzCheckerTests
    {
        private readonly BuzzChecker _buzzChecker = new BuzzChecker();

        [Theory]
        [InlineData(-1)]
        [InlineData(-5)]
        [InlineData(-100)]
        public void Verify_ShouldThrowException_WhenNumberIsNegative(int negativeNumber)
        {
            Should.Throw<ArgumentException>(() => _buzzChecker.Verify(negativeNumber));
        }

        [Theory]
        [InlineData(3)]
        [InlineData(6)]
        [InlineData(9)]
        public void Verify_ShouldReturnFizz_WhenNumberIsDivisibleBy3(int number)
        {
            var result = _buzzChecker.Verify(number);
            result.ShouldBe("Fizz");
        }

        [Theory]
        [InlineData(5)]
        [InlineData(10)]
        [InlineData(20)]
        public void Verify_ShouldReturnBuzz_WhenNumberIsDivisibleBy5(int number)
        {
            var result = _buzzChecker.Verify(number);
            result.ShouldBe("Buzz");
        }

        [Theory]
        [InlineData(15)]
        [InlineData(30)]
        [InlineData(45)]
        public void Verify_ShouldReturnFizzBuzz_WhenNumberIsDivisibleBy3And5(int number)
        {
            var result = _buzzChecker.Verify(number);
            result.ShouldBe("FizzBuzz");
        }

        [Theory]
        [InlineData(1)]
        [InlineData(2)]
        [InlineData(4)]
        public void Verify_ShouldReturnNumber_WhenNotDivisibleBy3Or5(int number)
        {
            var result = _buzzChecker.Verify(number);
            result.ShouldBe(number.ToString());
        }
    }
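
The prompt asked for the Arrange Act Assert pattern. As a minimal sketch of what that layout could look like for two of the cases, assuming xUnit and Shouldly are referenced: the class name `BuzzCheckerAaaTests` is hypothetical, and the `BuzzChecker` stub is repeated here only so the snippet compiles on its own. Against the unimplemented stub both tests are expected to fail, which is the Red state.

```csharp
using System;
using Shouldly;
using Xunit;

// Stub repeated from the earlier step so this snippet is self-contained.
public class BuzzChecker
{
    public string Verify(int number) => throw new NotImplementedException();
}

// Hypothetical test class name, used only for this illustration.
public class BuzzCheckerAaaTests
{
    [Theory]
    [InlineData(3)]
    [InlineData(6)]
    [InlineData(9)]
    public void Verify_ShouldReturnFizz_WhenNumberIsDivisibleBy3(int number)
    {
        // Arrange
        var buzzChecker = new BuzzChecker();

        // Act
        var result = buzzChecker.Verify(number);

        // Assert
        result.ShouldBe("Fizz");
    }

    [Theory]
    [InlineData(-1)]
    [InlineData(-5)]
    public void Verify_ShouldThrowException_WhenNumberIsNegative(int negativeNumber)
    {
        // Arrange
        var buzzChecker = new BuzzChecker();

        // Act: capture the call so the assertion can invoke it.
        Action act = () => buzzChecker.Verify(negativeNumber);

        // Assert
        Should.Throw<ArgumentException>(act);
    }
}
```

Keeping the three phases visually separate makes it easier to spot when a generated test mixes setup with assertions.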

Generating the Feature Code

The next step is to make the tests pass with generated code. Telling the agent to implement the method in the correct place should yield a solution. I want the agent to verify that the implementation satisfies the requirements, and I want to verify it myself as well. The goal is to get all tests to Green.

Implement the feature for the BuzzChecker Verify Method. An exception should be thrown if negative numbers are passed in. If the number is divisible by 3, it should return “Fizz”. If the number is divisible by 5, it should output “Buzz”. If the number is divisible by both 3 and 5, it should return “FizzBuzz”. For all other integers, it should return the value as a string. Verify that all tests are passing after implementation, and adjust code until solution compiles and all existing tests pass.

Prompt

Updated BuzzChecker class with implementation:

    public class BuzzChecker
    {
        public string Verify(int number)
        {
            if (number < 0)
            {
                throw new ArgumentException("Number cannot be negative.", nameof(number));
            }

            if (number % 3 == 0 && number % 5 == 0)
            {
                return "FizzBuzz";
            }

            if (number % 3 == 0)
            {
                return "Fizz";
            }

            if (number % 5 == 0)
            {
                return "Buzz";
            }

            return number.ToString();
        }
    }

Refactoring the Code with the Agent

Now that we have working code, we may want to work with the agent to get it into a preferable state. In some cases, readability is prioritized over clever code for the sake of maintenance. In other cases, we may want short and efficient code. The code agents produce first is often fairly verbose, so in this case I want to refactor it to be more concise while still satisfying all of the requirements.

Refactor the BuzzChecker Verify Method. I would like the code to be more concise yet readable. Run the tests and make sure that all tests pass and the solution compiles after refactoring.

Prompt

Refactored BuzzChecker class:

    public class BuzzChecker
    {
        public string Verify(int number)
        {
            if (number < 0)
            {
                throw new ArgumentException("Number cannot be negative.", nameof(number));
            }

            return (number % 3, number % 5) switch
            {
                (0, 0) => "FizzBuzz",
                (0, _) => "Fizz",
                (_, 0) => "Buzz",
                _ => number.ToString(),
            };
        }
    }
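
Beyond rerunning the unit tests, one way to gain extra confidence in a refactor like this is to compare the old and new implementations directly over a range of inputs. The sketch below is not part of the workflow described here; `RefactorCheck`, `VerifyIfChain`, `VerifySwitch`, and `FirstMismatch` are hypothetical names that wrap the two versions shown above.

```csharp
using System;

// Hypothetical helper, for illustration only.
public static class RefactorCheck
{
    // The if-chain version from the Green step.
    public static string VerifyIfChain(int number)
    {
        if (number < 0)
        {
            throw new ArgumentException("Number cannot be negative.", nameof(number));
        }

        if (number % 3 == 0 && number % 5 == 0) return "FizzBuzz";
        if (number % 3 == 0) return "Fizz";
        if (number % 5 == 0) return "Buzz";
        return number.ToString();
    }

    // The switch-expression version from the Refactor step.
    public static string VerifySwitch(int number)
    {
        if (number < 0)
        {
            throw new ArgumentException("Number cannot be negative.", nameof(number));
        }

        return (number % 3, number % 5) switch
        {
            (0, 0) => "FizzBuzz",
            (0, _) => "Fizz",
            (_, 0) => "Buzz",
            _ => number.ToString(),
        };
    }

    // Returns the first input in [0, upperBound] where the two versions
    // disagree, or null when they agree on every input in that range.
    public static int? FirstMismatch(int upperBound)
    {
        for (var n = 0; n <= upperBound; n++)
        {
            if (VerifyIfChain(n) != VerifySwitch(n))
            {
                return n;
            }
        }

        return null;
    }
}
```

Calling `RefactorCheck.FirstMismatch(1000)` should return `null` if the refactor preserved behavior across that range.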

Adding a Service

Typically, as a system grows, you find ways to eliminate code duplication (DRY) or abstract logic. These opportunities often aren’t apparent at design time, so the abstractions get added as features are implemented. I wanted to simulate adding a service to handle internal logic with an agent, and to verify that all tests still pass.

Add a new interface called IBuzzHelper to the Fizzing.Buzz Project as a separate file. This interface should have a single method called BuzzCheck that takes an int and returns a string. Update the BuzzChecker class to accept the interface as a parameter in the constructor. There should only be one constructor with one parameter. Create a private instance of the interface. Update the Verify method switch return a call to BuzzCheck as the default case, leaving all logic in place. Run the tests to make sure that they fail and do not compile yet. Update the test files to handle the new interface and mock it using NSubstitute where necessary, use xUnit for tests and Shouldly for assertions. After updating ensure the solution compiles and all tests pass.

Prompt

New Interface Added:

    public interface IBuzzHelper
    {
        string BuzzCheck(int number);
    }

Updated BuzzChecker class:

    public class BuzzChecker
    {
        private readonly IBuzzHelper _buzzHelper;

        public BuzzChecker(IBuzzHelper buzzHelper)
        {
            _buzzHelper = buzzHelper;
        }

        public string Verify(int number)
        {
            if (number < 0)
            {
                throw new ArgumentException("Number cannot be negative.", nameof(number));
            }

            return (number % 3, number % 5) switch
            {
                (0, 0) => "FizzBuzz",
                (0, _) => "Fizz",
                (_, 0) => "Buzz",
                _ => _buzzHelper.BuzzCheck(number),
            };
        }
    }
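
The walkthrough only mocks `IBuzzHelper` in tests and does not show a concrete implementation, yet something has to be passed to the constructor outside of tests. As a minimal sketch, assuming the helper should simply preserve the earlier behavior of returning the number as a string: `PassThroughBuzzHelper` is a hypothetical name, and the interface is repeated so the snippet compiles on its own.

```csharp
// Interface repeated from above so this snippet is self-contained.
public interface IBuzzHelper
{
    string BuzzCheck(int number);
}

// Hypothetical implementation, not part of the original walkthrough.
// It keeps the pre-service behavior: the number is returned as text.
public class PassThroughBuzzHelper : IBuzzHelper
{
    public string BuzzCheck(int number) => number.ToString();
}
```

With this in place, `new BuzzChecker(new PassThroughBuzzHelper())` would behave like the refactored class from the previous step.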

Updated Tests:

    public class BuzzCheckerTests
    {
        private readonly BuzzChecker _buzzChecker;
        private readonly IBuzzHelper _buzzHelper;

        public BuzzCheckerTests()
        {
            _buzzHelper = Substitute.For<IBuzzHelper>();
            _buzzChecker = new BuzzChecker(_buzzHelper);
        }

        [Theory]
        [InlineData(-1)]
        [InlineData(-5)]
        [InlineData(-100)]
        public void Verify_ShouldThrowException_WhenNumberIsNegative(int negativeNumber)
        {
            Should.Throw<ArgumentException>(() => _buzzChecker.Verify(negativeNumber));
        }

        [Theory]
        [InlineData(3)]
        [InlineData(6)]
        [InlineData(9)]
        public void Verify_ShouldReturnFizz_WhenNumberIsDivisibleBy3(int number)
        {
            var result = _buzzChecker.Verify(number);
            result.ShouldBe("Fizz");
        }

        [Theory]
        [InlineData(5)]
        [InlineData(10)]
        [InlineData(20)]
        public void Verify_ShouldReturnBuzz_WhenNumberIsDivisibleBy5(int number)
        {
            var result = _buzzChecker.Verify(number);
            result.ShouldBe("Buzz");
        }

        [Theory]
        [InlineData(15)]
        [InlineData(30)]
        [InlineData(45)]
        public void Verify_ShouldReturnFizzBuzz_WhenNumberIsDivisibleBy3And5(int number)
        {
            var result = _buzzChecker.Verify(number);
            result.ShouldBe("FizzBuzz");
        }

        [Theory]
        [InlineData(1)]
        [InlineData(2)]
        [InlineData(4)]
        public void Verify_ShouldReturnNumber_WhenNotDivisibleBy3Or5(int number)
        {
            _buzzHelper.BuzzCheck(number).Returns(number.ToString());
            var result = _buzzChecker.Verify(number);
            result.ShouldBe(number.ToString());
            _buzzHelper.Received(1).BuzzCheck(number);
        }
    }
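
The updated tests check that the helper is called in the default case. A complementary check, sketched here as an assumption about what one might also want to verify, is that the helper is not called when the switch handles the number itself. It uses NSubstitute's `DidNotReceive()` and `Arg.Any<int>()`. The test class name is hypothetical, and the production types are repeated so the snippet compiles on its own.

```csharp
using System;
using NSubstitute;
using Xunit;

// Types repeated from above so this snippet is self-contained.
public interface IBuzzHelper
{
    string BuzzCheck(int number);
}

public class BuzzChecker
{
    private readonly IBuzzHelper _buzzHelper;

    public BuzzChecker(IBuzzHelper buzzHelper) => _buzzHelper = buzzHelper;

    public string Verify(int number)
    {
        if (number < 0)
        {
            throw new ArgumentException("Number cannot be negative.", nameof(number));
        }

        return (number % 3, number % 5) switch
        {
            (0, 0) => "FizzBuzz",
            (0, _) => "Fizz",
            (_, 0) => "Buzz",
            _ => _buzzHelper.BuzzCheck(number),
        };
    }
}

// Hypothetical test class name, used only for this illustration.
public class BuzzCheckerHelperInteractionTests
{
    [Theory]
    [InlineData(3)]
    [InlineData(5)]
    [InlineData(15)]
    public void Verify_ShouldNotCallHelper_WhenNumberIsDivisibleBy3Or5(int number)
    {
        // Arrange
        var buzzHelper = Substitute.For<IBuzzHelper>();
        var buzzChecker = new BuzzChecker(buzzHelper);

        // Act
        buzzChecker.Verify(number);

        // Assert: the helper was never invoked, whatever the argument.
        buzzHelper.DidNotReceive().BuzzCheck(Arg.Any<int>());
    }
}
```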

Finally Done

After running through the process, I have worked with the agent to create a new feature with plenty of unit tests. The majority of the actual work was done by the agent, but the thinking (what thinking there was) was done by me. I learned quite a few things from this process:

- In Visual Studio, with Gemini 2.5, the context either gets too big too fast or has some wonky implementation at the moment. I found it useful to start from an empty context for each prompt.

- Because each prompt started with no context, the prompts had to be much more specific or the agent would deviate. In the past I have found that including an instructions file in the context helps with this, but that is out of scope for this experiment.

- For trivial tasks like this, it is a long process, but I can see the usefulness for a developer who knows what they want out of a method but does not yet have an idea of how to implement it.

- The agent doesn’t always listen 100%. If you notice, I told it to use Arrange Act Assert and it did not do so, though it did create the tests. The lesson there is that you should watch it very carefully or you may have to re-do your screen captures (which I’m not about to do).

Conclusion

Agentic coding and “vibe coding” are a great step forward in development. As we move into a new programming paradigm, we should continue to heed the hard-learned lessons of the past. Agents aren’t perfect in their delivery; like all computers, they only do what they are told, as they interpret it. Providing tests to validate their output can help make the most of this awesome power. Agent mode has been an awesome addition to Visual Studio and will be great to use going forward. I’m anticipating some great things from the new tools being added.