Sitecore XM Cloud Webhook Limitation Workaround

Sitecore XM Cloud provides webhooks for internal events, but it does not currently offer a way to replay them. When business-critical processes depend on these events, a missed delivery can cause real problems. Within XM Cloud itself, however, a few quick steps can ensure that every item is processed. I will walk you through a real-world example and the fix we applied.

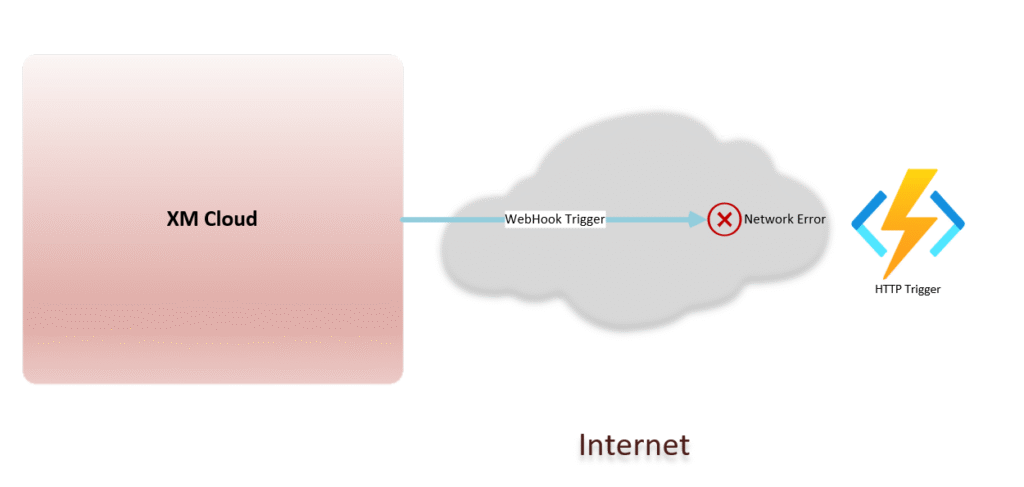

Sitecore webhooks can fail in a variety of ways, and some requests never even make it through the network. Because delivery is not guaranteed, essential processes that rely solely on webhooks can end up skipping items.

Content Sync Failure Example

We had a client that needed to sync content into XM Cloud from an external system, a common task within Sitecore. We used a vendor-provided plugin for the sync. Because of limitations in the source system and the plugin, the synced fields required some post-processing, which was triggered by an XM Cloud webhook. Most syncs worked fine, but those that did not complete every step caused issues, and getting them reprocessed was not a trivial task for the team at the time.

The process depended on the item:saved and item:created events, which together cover every newly created or updated item. For each event, the handler first checked the item's template to decide whether the item needed processing, then placed it into the appropriate topic on Azure Service Bus, where the actual processing ran.
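The routing decision in that handler can be sketched in PowerShell. This is a minimal sketch under stated assumptions, not the project's actual handler: the `Get-ProcessingTopic` function, the template IDs, and the topic names are all hypothetical, and it assumes the event name and template ID have already been read from the webhook payload.

```powershell
# Hypothetical routing table: template ID -> Azure Service Bus topic name.
# These IDs and topic names are placeholders, not values from the original project.
$topicByTemplate = @{
    '{11111111-1111-1111-1111-111111111111}' = 'article-processing'
    '{22222222-2222-2222-2222-222222222222}' = 'product-processing'
}

# Hypothetical helper: returns the topic for an event, or $null when nothing should be queued.
function Get-ProcessingTopic {
    param(
        [Parameter(Mandatory)] [string] $EventName,
        [Parameter(Mandatory)] [string] $TemplateId,
        [Parameter(Mandatory)] [hashtable] $TopicMap
    )
    # Only the two events the process depends on are routed.
    if ($EventName -notin @('item:saved', 'item:created')) { return $null }

    # Normalize the ID to the braced, upper-case form used as table keys.
    $key = ([guid]$TemplateId).ToString('B').ToUpper()

    # Items whose template is not in the table need no processing.
    if ($TopicMap.ContainsKey($key)) { return $TopicMap[$key] }
    return $null
}
```

For example, `Get-ProcessingTopic -EventName 'item:saved' -TemplateId '11111111-1111-1111-1111-111111111111' -TopicMap $topicByTemplate` returns `article-processing`, while an unrelated event or template yields `$null`, so the handler can acknowledge the webhook without enqueuing any work.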

The Failure: 499

In our case, we routinely received HTTP status code 499 (a non-standard code indicating that the client closed the request, typically because it timed out or was cancelled) for no apparent reason. Tracing the error showed that it often occurred before the request even reached our code.

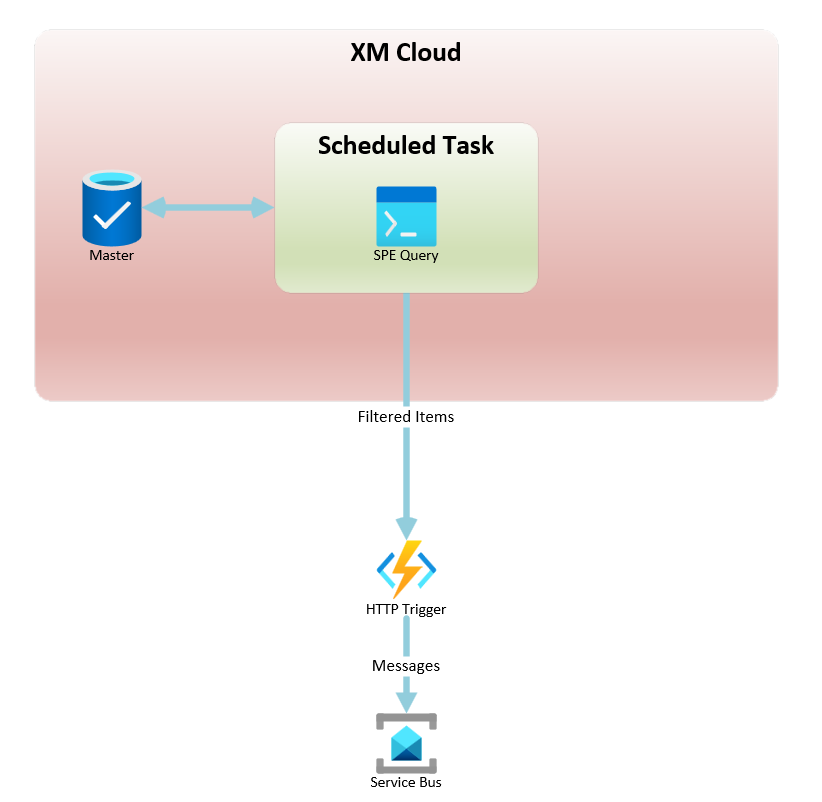

To guarantee that items were always processed, we turned to Sitecore PowerShell Extensions (SPE) and templates. By adding a new inherited template that tracks processing status on each individual item, we could confirm that every item was processed after it was synced.

A New Template

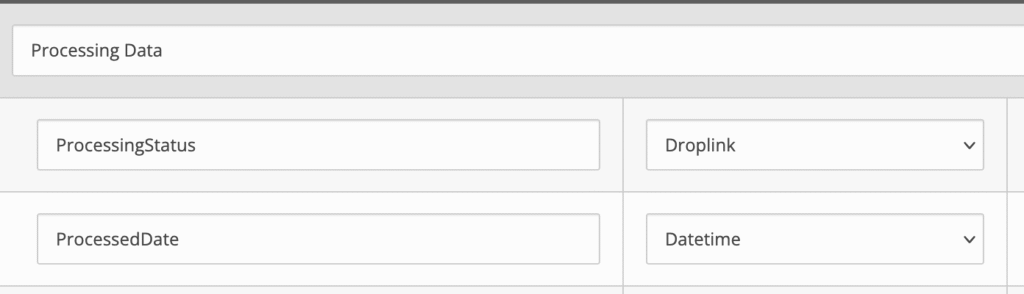

First, we created a new template with two fields:

- ProcessingStatus: An optional status field. It lets processing continue from where it stopped if an error occurs between stages. Its values were specific to our implementation.

- ProcessedDate: A datetime value that our processor sets once all processing completes, recording when the item was last processed.

This template was then added as an inherited template to all items synced from the source system. Neither ProcessedDate nor ProcessingStatus has a default value, so an empty value signifies a newly synced item that has never been processed.
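The "does this item still need processing?" rule that these two fields enable can be expressed as a small, testable function. This is a sketch under assumptions: it works on raw field strings in Sitecore's compact ISO format (for example `20240131T143000Z`), and both `ConvertFrom-SitecoreIsoDate` and `Test-NeedsProcessing` are hypothetical names, not SPE cmdlets.

```powershell
# Hypothetical helper: parses a raw Sitecore ISO date such as 20240131T143000Z.
# An empty value or DateTime.MinValue (00010101T000000) is treated as "never set".
function ConvertFrom-SitecoreIsoDate {
    param([string] $Value)
    if ([string]::IsNullOrWhiteSpace($Value) -or $Value -like '00010101T000000*') { return $null }
    $formats = [string[]]@("yyyyMMdd'T'HHmmss'Z'", "yyyyMMdd'T'HHmmss")
    $culture = [System.Globalization.CultureInfo]::InvariantCulture
    $styles = [System.Globalization.DateTimeStyles]'AssumeUniversal, AdjustToUniversal'
    return [datetime]::ParseExact($Value, $formats, $culture, $styles)
}

# Hypothetical helper: $true when an item was never processed, or was updated
# more than $ToleranceSeconds after its last processing run.
function Test-NeedsProcessing {
    param([string] $ProcessedDate, [string] $Updated, [int] $ToleranceSeconds = 0)
    $processedAt = ConvertFrom-SitecoreIsoDate $ProcessedDate
    if ($null -eq $processedAt) { return $true }   # newly synced, never processed
    $updatedAt = ConvertFrom-SitecoreIsoDate $Updated
    return ($null -ne $updatedAt -and $updatedAt -gt $processedAt.AddSeconds($ToleranceSeconds))
}
```

The tolerance is an optional design choice: if the processor writes ProcessedDate through a normal save, Sitecore typically refreshes `__Updated` in the same save, which would make the item look changed again on the next run. A small tolerance, or writing the field without updating item statistics, is one way to avoid that loop.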

Find the Synced Items

The next step was to write a PowerShell script that finds all items inheriting from the new template. It then keeps only the items that still need processing: those with no ProcessedDate (never processed) and those whose last update (__Updated) is later than their ProcessedDate (changed since the last processing run).

# Sitecore's ISO representation of DateTime.MinValue, used as the "never processed" marker
$notProcessedDate = [Sitecore.DateUtil]::ToIsoDate([datetime]::MinValue)
$sitePath = "master:/sitecore/content/Path/To/Site"
$baseTemplateId = "{Id-for-template-goes-here}"
$apiEndpoint = "https://your-api-endpoint"

# Collect every synced item that was never processed or was updated after it was last processed.
# The full item stays in the pipeline (no Select-Object), so __Updated and ProcessingStatus remain readable.
$guids = @(
    Get-ChildItem -Path $sitePath -Recurse |
        Where-Object { $_.DescendsFrom($baseTemplateId) } |
        ForEach-Object {
            $lastUpdated = $_.__Updated
            $lastProcessed = $_.ProcessedDate

            $notYetProcessed = [string]::IsNullOrEmpty([string]$lastProcessed) -or $lastProcessed -eq $notProcessedDate
            $recentlyUpdated = $lastProcessed -lt $lastUpdated

            if ($notYetProcessed -or $recentlyUpdated) {
                # Emit the payload object; the surrounding @( ) collects the results into an array
                [PSCustomObject]@{
                    ProcessedDate = [string]$lastProcessed
                    ItemId        = $_.ID.Guid.ToString("B").ToUpper()
                    Status        = $_.ProcessingStatus
                }
            }
        }
)

# Send the items to the processing endpoint in batches of 100
$batchSize = 100
for ($i = 0; $i -lt $guids.Count; $i += $batchSize) {
    $lastIndex = [math]::Min($i + $batchSize, $guids.Count) - 1
    $batch = $guids[$i..$lastIndex]

    # -InputObject @(...) keeps a single-item batch serialized as a JSON array
    $jsonBody = ConvertTo-Json -InputObject @($batch) -Compress
    Invoke-RestMethod -Uri $apiEndpoint -Method Post -Body $jsonBody -ContentType "application/json"
}

This script gathers all items that still need processing (newly created, or updated since their last processing run) and sends them to a processing endpoint in batches of 100. The endpoint then either processes each item fully or continues from the point where an earlier attempt failed.
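How the endpoint might decide where to continue can be sketched as follows. The original does not describe the stages or the exact meaning of ProcessingStatus, so this sketch assumes ProcessingStatus holds the name of the last stage that completed successfully; the stage names and `Get-RemainingStages` are hypothetical.

```powershell
# Hypothetical, ordered processing stages. The real stages were implementation-specific.
$pipelineStages = @('CleanHtml', 'MapTaxonomy', 'UpdateReferences')

# Hypothetical helper: returns the stages still to run, assuming $Status is the
# name of the last stage that completed successfully (empty for a new item).
function Get-RemainingStages {
    param([string] $Status, [string[]] $Stages)

    # No status: a newly synced item, so run every stage.
    if ([string]::IsNullOrWhiteSpace($Status)) { return $Stages }

    $index = [array]::IndexOf($Stages, $Status)
    # Unrecognized status: start over rather than guess.
    if ($index -lt 0) { return $Stages }

    # Last stage already completed: nothing left to run.
    if ($index -ge $Stages.Count - 1) { return }

    # Resume with the stage after the last completed one.
    return $Stages[($index + 1)..($Stages.Count - 1)]
}
```

Restarting from the first stage on an unrecognized status trades some repeated work for safety; whether that is acceptable depends on the stages being safe to re-run.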

Add Task to Process Failures

To ensure this runs regularly, we then created a PowerShell Scripted Task Schedule that runs the script above periodically. This way, items are still processed even when a webhook delivery fails.
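The run frequency is controlled by the task item's schedule value. Assuming the task uses Sitecore's standard Schedule field format (start date | end date | days-of-week bitmask | interval), a hypothetical helper like `New-ScheduleValue` can build that string; treat it as a sketch and confirm the format against your instance before relying on it.

```powershell
# Hypothetical helper that builds a Sitecore Schedule field value:
# start (yyyyMMdd) | end (yyyyMMdd) | days-of-week bitmask | interval (hh:mm:ss)
function New-ScheduleValue {
    param(
        [Parameter(Mandatory)] [datetime] $Start,
        [Parameter(Mandatory)] [datetime] $End,
        # This sketch only supports intervals shorter than one day.
        [Parameter(Mandatory)] [ValidateScript({ $_.TotalHours -lt 24 })] [timespan] $Interval,
        [ValidateSet('Sunday', 'Monday', 'Tuesday', 'Wednesday', 'Thursday', 'Friday', 'Saturday')]
        [string[]] $Days = @('Sunday', 'Monday', 'Tuesday', 'Wednesday', 'Thursday', 'Friday', 'Saturday')
    )
    # Sunday = 1, Monday = 2, ... Saturday = 64; all seven days = 127.
    $dayFlags = @{ Sunday = 1; Monday = 2; Tuesday = 4; Wednesday = 8; Thursday = 16; Friday = 32; Saturday = 64 }
    $mask = 0
    foreach ($day in $Days) { $mask = $mask -bor $dayFlags[$day] }

    '{0:yyyyMMdd}|{1:yyyyMMdd}|{2}|{3:hh\:mm\:ss}' -f $Start, $End, $mask, $Interval
}
```

For example, a 30-minute interval on every day from 2024-01-01 to 2099-12-31 produces `20240101|20991231|127|00:30:00`. Shorter intervals catch missed webhooks sooner, at the cost of more frequent content tree scans.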

Conclusion

Used alongside the webhook-driven processing, this scheduled sweep ensures that all newly synced items are processed, even when the webhooks encounter unexpected errors. It is a simple, maintainable solution that fits easily into an existing ecosystem.